Is it possible to peel back the layers of reality with a single click? The rise of "undress AI" technology is not just a technological advancement; it's a Pandora's Box that challenges our understanding of privacy, consent, and the very fabric of digital ethics. This controversial innovation offers the capability to digitally remove clothing from images using sophisticated artificial intelligence, sparking a fierce debate about its potential uses and abuses.

These platforms employ intricate deep learning algorithms, most notably Stable Diffusion and Generative Adversarial Networks (GANs), to generate altered versions of uploaded images. The AI meticulously attempts to recreate the areas hidden by clothing, producing images that simulate nudity with alarming realism. The user experience is often streamlined, promising ease of use and quick results. However, this ease of access masks a complex web of ethical and legal considerations that demand careful examination.

| Category | Information |
|---|---|
| Technology | AI-powered image modification |
| Algorithms Used | Stable Diffusion, Generative Adversarial Networks (GANs) |
| Primary Function | Digitally remove clothing from images |
| Applications | Fashion design, virtual fitting rooms, digital art |
| Ethical Concerns | Privacy violations, non-consensual image manipulation, potential for misuse |
| Legal Implications | Varying laws on image manipulation, defamation, and revenge porn |
| User Base | Tech enthusiasts, fashion designers, everyday users |
| Popular Platforms | Undress AI apps, Unclothy, Clothoffbot |
| Controversies | Ethical debates, concerns over deepfake technology, potential for harassment |
| References | Electronic Frontier Foundation (EFF) |

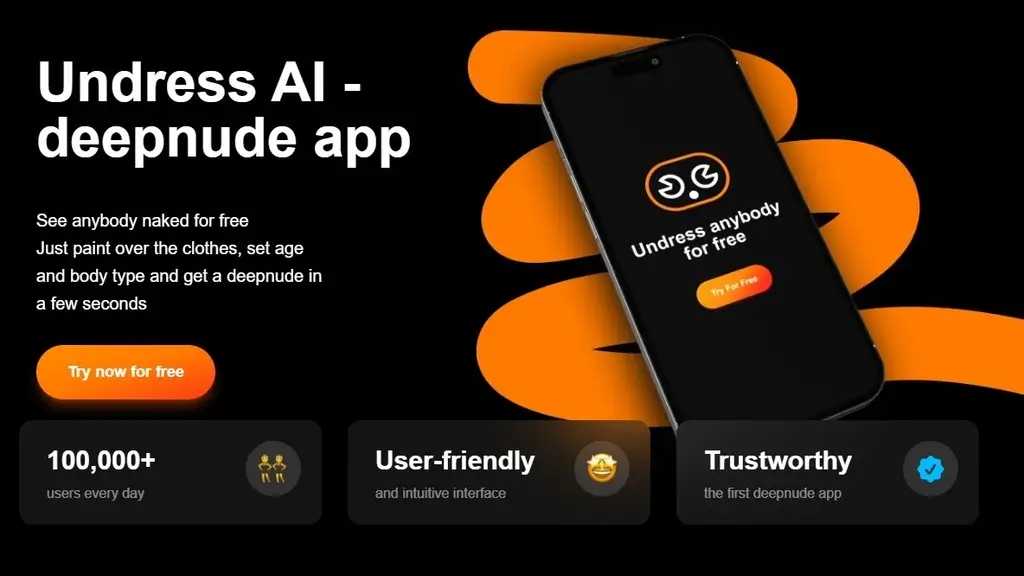

The marketing of these "undress AI" services often emphasizes versatility and user-friendliness. Platforms boast a range of style options, from suits and lingerie to bikinis and more, suggesting the ability to "transform and modify character outfits with ease." Promoters highlight how a user can simply input character descriptions like gender, body type, pose, and the instruction to "remove cloth" into a prompt box, and the AI will automatically generate images based on those parameters. This almost playful presentation obscures the fact that the technology is capable of creating deeply disturbing and potentially illegal content.

The rise of "undress AI" apps has undeniably captured public attention. Tech enthusiasts are fascinated by the underlying algorithms and their capacity for realistic image manipulation. Fashion designers see potential applications in virtual prototyping and visualizing designs on different body types. Even everyday users are drawn in by the novelty and the promise of easy image alteration. This widespread curiosity, however, does not negate the inherent dangers of such technology. The line between harmless experimentation and harmful misuse is dangerously thin.

Proponents often point to applications like fashion design and virtual fitting rooms as legitimate uses for "undress AI." The idea is that designers could use the technology to visualize clothing on virtual models, eliminating the need for physical prototypes. Similarly, consumers could use virtual fitting rooms to "try on" clothes without actually undressing. While these applications may seem benign, they raise concerns about body image, unrealistic beauty standards, and the potential for misuse. For example, could retailers use AI to subtly alter images of models, creating an even more unattainable ideal for consumers?

Unclothy, for instance, is marketed as an AI tool specifically designed to "undress photos." Users upload images, and the tool claims to automatically detect and remove clothing, generating so-called "deepnude images." The promise of "generating realistic results quickly and safely" is a blatant attempt to normalize a technology that is anything but safe. The creation and distribution of deepnude images can have devastating consequences for victims, including emotional distress and reputational damage, and can expose those who create or share them to legal repercussions.

The developers of these platforms often emphasize the speed and efficiency of their AI, promising users the ability to "start generating realistic results quickly and safely." This focus on convenience and accessibility further lowers the barrier to entry, making it easier for malicious actors to exploit the technology. The reality is that there is nothing "safe" about creating and distributing non-consensual nude images. The potential for harm far outweighs any perceived benefits.

In an era where technology and privacy intersect in increasingly complex ways, "undress AI" emerges as a project that directly challenges the boundaries of digital art, privacy, and consent. The very act of transforming clothed images into nude representations is fraught with ethical implications. While some might argue that it's a form of artistic expression, others see it as a blatant violation of privacy and a tool for harassment and exploitation. The debate is far from settled, and it requires a nuanced understanding of the technology and its potential impact.

This technology leverages the power of artificial intelligence (AI) to transform clothed images into nude representations, sparking both awe and controversy. The ability to create realistic nude images from clothed photographs raises fundamental questions about the nature of consent and the right to control one's own image. Is it ever ethical to create a nude image of someone without their explicit permission? What safeguards can be put in place to prevent the misuse of this technology?

"Undress AI" is marketed as an advanced AI application designed to modify images to create the illusion that individuals are unclothed. The underlying technology relies on sophisticated algorithms that analyze the shape and texture of clothing, then attempt to "fill in" the areas underneath with realistic-looking skin and body parts. This process requires a significant amount of computing power and advanced machine learning techniques. However, the ethical considerations are often overlooked in the rush to showcase the technology's capabilities.

The accuracy of these tools is constantly improving, making it increasingly difficult to distinguish between real and AI-generated nude images. This poses a significant threat to individuals, particularly women, who may find themselves the victims of non-consensual deepfakes. The spread of these images can have a devastating impact on their lives, leading to emotional distress, reputational damage, and even physical harm.

The claim that these tools "accurately" alter photographs is a gross misrepresentation of the reality. While the technology may be able to create visually convincing images, it is still prone to errors and inaccuracies. The AI may misinterpret the shape of the body, leading to distorted or unrealistic results. Moreover, the AI is trained on vast datasets of images, which may contain biases that are reflected in the generated images. This can lead to the perpetuation of harmful stereotypes and the objectification of women.

The resurgence of "undress AI" apps has once again thrust this controversial technology into the spotlight. The ease with which these apps can be used, combined with the potential for misuse, makes them a significant threat to privacy and safety. It is crucial that individuals are aware of the risks and take steps to protect themselves from becoming victims of non-consensual deepfakes.

Fashion designers, while intrigued by the potential applications of "undress AI," must also be aware of the ethical implications. Using the technology to create virtual prototypes is one thing, but using it to alter images of models without their consent is a clear violation of their rights. The fashion industry has a responsibility to promote ethical practices and to avoid using technology that could be used to exploit or harm individuals.

The promise of "virtual fitting rooms" powered by "undress AI" is another area of concern. While the idea of trying on clothes virtually may seem appealing, it raises questions about data privacy and security. How will these virtual fitting rooms protect users' personal information? What safeguards will be put in place to prevent the misuse of the images that are generated?

The underlying technology allows users to "virtually undress images," providing a range of potential applications, from fashion design to virtual fitting rooms. However, this convenience comes at a cost. The ease with which images can be manipulated raises serious concerns about the potential for misuse, including the creation and distribution of non-consensual deepfakes. It is crucial that users are aware of the risks and take steps to protect themselves from becoming victims of this technology.

The marketing of these apps often downplays the ethical and legal implications, focusing instead on the ease of use and the potential for creative expression. However, the reality is that "undress AI" is a powerful tool that can be used to inflict significant harm. It is essential that users are aware of the risks and that developers take steps to prevent the misuse of the technology.

The existence of tools like Unclothy, which are specifically designed to "undress photos," highlights the disturbing trend towards the normalization of non-consensual image manipulation. These tools leverage advanced AI models to automatically detect and remove clothing, generating what are euphemistically called "deepnude images." The very term "deepnude" sanitizes a practice that is inherently exploitative and harmful.

The claim that users can "start generating realistic results quickly and safely" is a blatant lie. There is nothing "safe" about creating and distributing non-consensual nude images. The potential for harm far outweighs any perceived benefits. These tools should be treated with extreme caution and used only with the explicit consent of all individuals involved.

The developers of these tools often attempt to deflect criticism by claiming that they are simply providing a technology and that it is up to users to use it responsibly. However, this argument is disingenuous. The very design of these tools encourages misuse and makes it easy for malicious actors to create and distribute harmful content. Developers have a responsibility to consider the potential consequences of their technology and to take steps to prevent its misuse.

The emergence of "undressai" as a "bold project" that "challenges the boundaries of digital art, privacy, and consent" is a dangerous and misleading characterization. While it may be true that the technology is pushing boundaries, it is doing so in a way that is harmful and unethical. The project is not simply exploring the limits of digital art; it is creating a tool that can be used to exploit and harass individuals.

The transformation of clothed images into nude representations is not a neutral act. It is a deliberate attempt to strip individuals of their privacy and dignity. The fact that this is being done with the aid of artificial intelligence does not make it any less harmful. In fact, it may make it even more so, as it allows for the creation of realistic deepfakes that can be difficult to detect.

The claim that "undress AI" is an "advanced AI application" designed to "modify images accurately" is another example of the misleading marketing that surrounds this technology. While the AI may be sophisticated, it is not infallible. The images that it generates are often inaccurate and distorted, and they can perpetuate harmful stereotypes. Moreover, the very act of modifying images without consent is a violation of privacy and a form of digital assault.

The claim that this tool "leverages sophisticated techniques to alter photographs accurately" is likewise a gross exaggeration. The output may look convincing, but it remains error-prone and reflects whatever biases are present in the training data.

The renewed popularity of "undress AI" apps is a disturbing trend that should be met with concern and outrage. These apps are not simply harmless novelties; they are tools that can be used to inflict significant harm. It is crucial that individuals are aware of the risks and that steps are taken to prevent the misuse of this technology.

The fact that fashion designers are among those who are interested in "undress AI" apps is particularly troubling. While it may be tempting to use the technology to create virtual prototypes or to visualize designs on different body types, it is essential to consider the ethical implications. Using "undress AI" to alter images of models without their consent is a clear violation of their rights and a form of digital exploitation.

The ability to "virtually undress images" may seem like a harmless novelty, but it has the potential to cause significant harm. The ease with which images can be manipulated makes it easy for malicious actors to create and distribute non-consensual deepfakes. It is crucial that users are aware of the risks and that developers take steps to prevent the misuse of this technology.

The existence of tools like Clothoffbot, a "revolutionary deepnude telegram ai bot engineered for undressing photos with ai," is a clear indication of the extent to which this technology has become normalized. The fact that this bot is being offered for free, with the promise of "fast and discreet" service, is particularly disturbing. It suggests that the developers are actively trying to encourage the use of the technology for malicious purposes.

The promise of a "free, fast, and discreet" service is a blatant attempt to lure unsuspecting users into using the bot for harmful purposes. The fact that the bot is being offered on Telegram, a platform known for its lax enforcement of content moderation policies, further increases the risk of misuse.

The instructions to "upload your photo to the bot and witness the magic unfold as it skillfully removes clothing" are a chilling reminder of the ease with which this technology can be used to violate privacy and dignity. The use of the word "magic" is particularly offensive, as it attempts to sanitize a practice that is inherently exploitative and harmful.

The promotion of "clothoff ai generator" by simply requiring users to "input character descriptions like gender, body type, remove cloth, pose etc in the prompt box" and then automatically rendering "unique images based on your prompts" is a dangerous simplification of a complex ethical issue. This approach reduces individuals to mere data points, ignoring their inherent dignity and right to control their own image.

The phrase "best ai clothes remover" is misleading because it implies that there is a legitimate use for this technology. The reality is that any tool that is designed to "edit out unwanted elements from clothing photos" and "undress subjects to create nude images" is inherently problematic and should be treated with extreme caution.

The surge in popularity of "apps and websites that use artificial intelligence to undress women in photos" is a disturbing trend that should be met with strong condemnation. The fact that "in september alone, 24 million people visited undressing" is a stark reminder of the scale of the problem and the urgent need for action.

The term "undressing" itself is a euphemism that attempts to sanitize a practice that is inherently exploitative and harmful. The reality is that these apps and websites are facilitating the non-consensual creation and distribution of deepfakes, which can have devastating consequences for victims.

The various platforms employing these technologies share a common thread: they lower the barrier to entry for creating and distributing non-consensual intimate images. This accessibility, coupled with the increasing realism of the AI-generated content, creates a perfect storm for abuse. The legal and ethical frameworks surrounding image manipulation are struggling to keep pace with these rapid technological advancements.

The debate surrounding "undress AI" highlights the urgent need for a broader societal conversation about digital ethics, consent, and the responsibility of technology developers. We must demand greater transparency and accountability from those who create and distribute these technologies. Furthermore, we need to educate the public about the risks of deepfakes and the importance of protecting their own digital privacy.

Ultimately, the future of "undress AI" will depend on how we choose to regulate and utilize this powerful technology. Will we allow it to be used as a tool for exploitation and abuse, or will we find ways to harness its potential for good while safeguarding individual rights and dignity? The answer to this question will shape not only the future of digital art but also the future of our society.
